Historical Origins

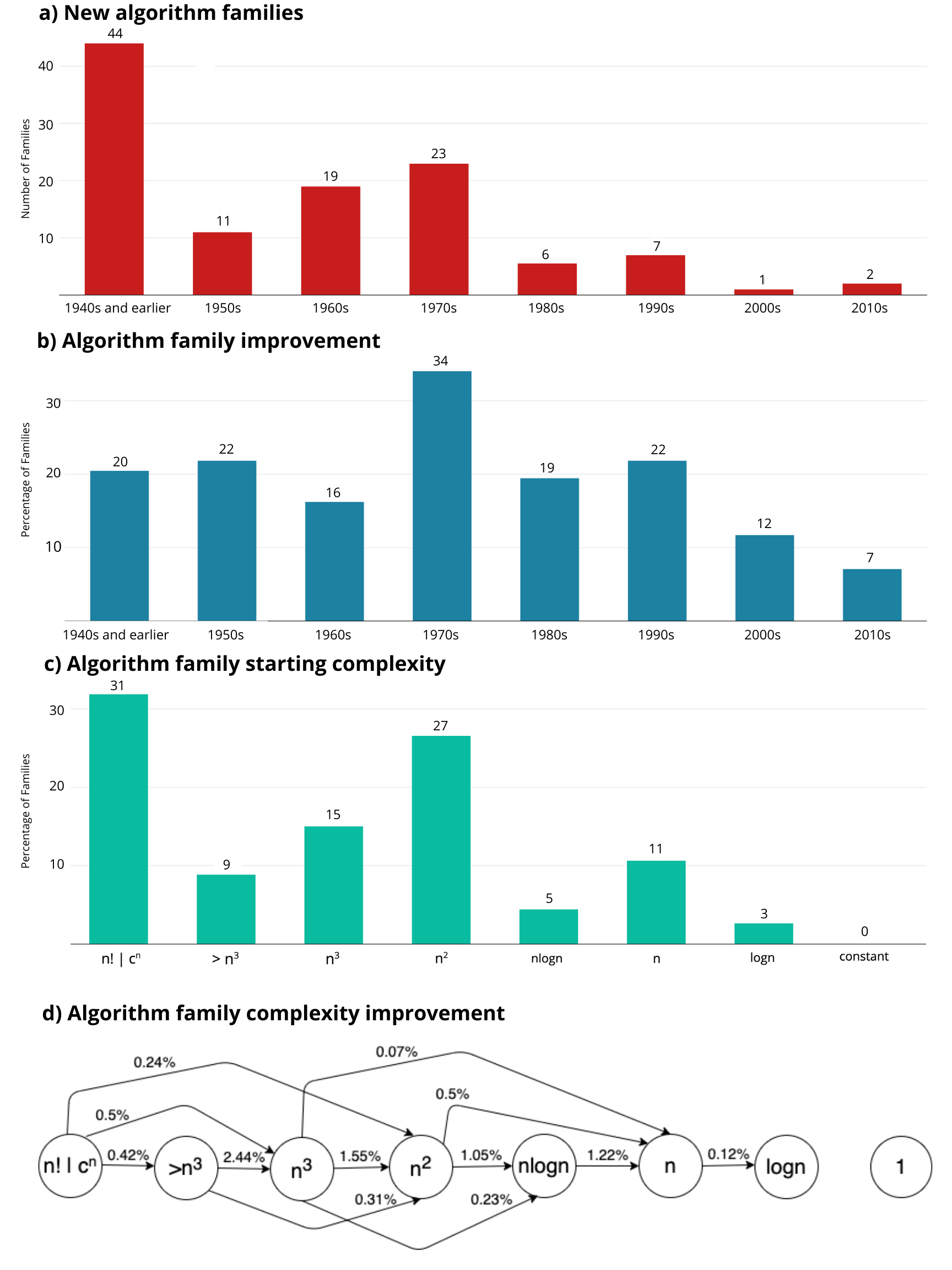

Panel (a) shows when the first algorithm in each family appeared, often as a brute-force implementation (straightforward, but computationally inefficient), and panel (b) shows the share of algorithms in each decade whose asymptotic time complexity improved. For example, in the 1970s, 23 new algorithm families were discovered and 34% of all previously discovered algorithm families were improved upon. In later decades, these rates of discovery and improvement fell, indicating a slowdown in progress on these types of algorithms. It is unclear exactly what caused this slowdown. One possibility is that some algorithms were already theoretically optimal, so further progress was impossible. Another is that algorithmic innovation has decreasing marginal returns because the easy-to-catch innovations have already been fished out, and what remains is harder to find or provides smaller gains. The growing importance of approximate algorithms may also be an explanation, if they have drawn researcher attention away (although the causality could also run in the opposite direction, with slower algorithmic progress pushing researchers into approximation).

Panels (c) and (d), respectively, show the distribution of time complexity classes for algorithms when they were first discovered, and the probabilities that algorithms in one class transition into another because of an algorithmic improvement. Time complexity describes how the number of operations grows with the input size: for example, a time complexity of O(n^2) indicates that, as the size of the input n grows, there exists a function Cn^2 (for some constant C) that upper-bounds the number of operations required. Asymptotic time complexity is a useful shorthand for discussing algorithms because, for a sufficiently large value of n, an algorithm with a higher asymptotic complexity will always require more steps to run. Later in the paper we show that, in general, little information is lost by our simplification to using asymptotic complexity.
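The "sufficiently large n" condition can be made concrete with a minimal sketch. The two step-count functions and their constants (1 and 1000) are hypothetical, chosen only for illustration and not taken from the paper's data. The sketch finds the input size beyond which the algorithm in the higher complexity class always needs more steps, even though its constant factor is far smaller.

```python
def quadratic_steps(n, c=1):
    """Hypothetical operation count for an O(n^2) algorithm: c * n^2."""
    return c * n * n


def linear_steps(n, c=1000):
    """Hypothetical operation count for an O(n) algorithm with a large constant."""
    return c * n


def crossover(limit=10**6):
    """Smallest n at which the quadratic algorithm needs more steps.

    Returns None if no such n exists up to `limit`.
    """
    for n in range(1, limit + 1):
        if quadratic_steps(n) > linear_steps(n):
            return n
    return None


if __name__ == "__main__":
    print(crossover())  # 1001 with the illustrative constants above
```

Below the crossover the quadratic algorithm is actually cheaper under these assumed constants, which is why asymptotic complexity is a shorthand and not a complete description of cost.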

Panel (c) shows that, at discovery, 31% of algorithm families belong to the exponential category (denoted n! | c^n), meaning that the number of operations they require grows at least exponentially with the input size. For these algorithms, which include those for the famous Traveling Salesman problem, the amount of computation grows so fast that it is often infeasible (even on a modern computer) to solve problems of size n=100. Another 50% of algorithm families begin with polynomial time complexity that is quadratic or higher, while 19% have asymptotic complexities of n log n or better.
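To give a sense of scale for the n=100 claim, the following rough sketch converts operation counts into running time. The machine speed of 10^9 operations per second is an assumption made purely for illustration, each complexity class is represented by a bare function with constant factor 1, and the helper names are hypothetical.

```python
import math

OPS_PER_SECOND = 1e9                      # assumed machine speed (illustrative only)
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def years_to_run(operations):
    """Convert an operation count into years at the assumed machine speed."""
    return operations / OPS_PER_SECOND / SECONDS_PER_YEAR


def runtimes(n):
    """Estimated running time in years for several complexity classes.

    Intended for modest n: n! exceeds floating-point range above roughly n = 170.
    """
    counts = {
        "n log n": n * math.log2(n),
        "n^2": n ** 2,
        "2^n": 2 ** n,
        "n!": math.factorial(n),
    }
    return {name: years_to_run(ops) for name, ops in counts.items()}


if __name__ == "__main__":
    for name, years in runtimes(100).items():
        print(f"{name:8s} {years:.3e} years")
```

Under these assumptions the polynomial classes finish in a tiny fraction of a second at n=100, while 2^n operations would take on the order of 10^13 years and n! far longer.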

Panel (d) shows that there is considerable movement of algorithms between complexity classes as algorithm designers find more efficient ways of implementing them. For example, on average from 1940 to 2019, algorithms with complexity O(n^2) transitioned to complexity O(n) with a probability of 0.5% per year, as calculated using equation. Of particular note in (d) are the transitions from factorial or exponential time (n! | c^n) to polynomial time. These improvements can have profound effects, making algorithms that were previously infeasible for problems of any significant size feasible for large data sets.
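One way to read an annual transition probability such as 0.5% is to compound it over the sample period. The sketch below is not the paper's equation for estimating transition probabilities. It assumes, purely for illustration, that the annual probability is constant and independent from year to year, and the function name is hypothetical.

```python
def prob_at_least_one_transition(annual_prob, years):
    """Probability of at least one transition in `years` years.

    Assumes a constant annual probability that is independent across years
    (an illustrative simplification, not the paper's method).
    """
    return 1.0 - (1.0 - annual_prob) ** years


if __name__ == "__main__":
    # 0.5% per year over the 80 years from 1940 to 2019
    print(f"{prob_at_least_one_transition(0.005, 80):.3f}")
```

Under these assumptions, a seemingly small 0.5% annual rate compounds to roughly a one-in-three chance of transition over the full period.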